This guide walks you through fine-tuning Llama 2 with LoRA (Low-Rank Adaptation) for question answering. The steps to fine-tune Llama 2 using LoRA are the same as for supervised fine-tuning (SFT). In the code, when loading the …

Related reading covers how to fine-tune Llama 2 using LoRA, retrieval-augmented generation (RAG) with Llama 2, and the CLIP and ImageBind models. For a longer treatment of QLoRA, see "Notes on fine-tuning Llama 2 using QLoRA: A detailed breakdown" by Ogban Ugot (23 min read, Sep.).

To fine-tune Llama 2 models successfully, you will need the following: …

The main objective of this blog post is to implement LoRA fine-tuning for sequence classification. LoRA-based fine-tuning offers performance nearly on par with full-parameter fine-tuning when … A single-node run on four GPUs, with FSDP and PEFT enabled, is launched like this: `torchrun --nnodes 1 --nproc_per_node 4 llama_finetuning.py --enable_fsdp --use_peft --peft_method …`
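
The `torchrun` command above starts four worker processes (`--nproc_per_node 4`) on one machine (`--nnodes 1`). Here is a minimal sketch of how each worker can find out where it sits in that launch. `torchrun` sets the `RANK`, `LOCAL_RANK`, and `WORLD_SIZE` environment variables for every process it starts. The helper name `describe_worker` is hypothetical, and this is not code from `llama_finetuning.py`.

```python
import os


def describe_worker():
    """Report this process's position in a torchrun launch.

    torchrun sets RANK, LOCAL_RANK and WORLD_SIZE for each worker. With
    --nnodes 1 --nproc_per_node 4 there are four workers with ranks 0-3.
    The defaults below apply when the script is run with plain `python`.
    """
    rank = int(os.environ.get("RANK", "0"))
    local_rank = int(os.environ.get("LOCAL_RANK", "0"))
    world_size = int(os.environ.get("WORLD_SIZE", "1"))
    return {
        "rank": rank,
        "local_rank": local_rank,  # commonly used to pick the device, e.g. cuda:<local_rank>
        "world_size": world_size,
        "is_main": rank == 0,      # by convention, rank 0 handles logging and checkpoint saving
    }


if __name__ == "__main__":
    print(describe_worker())
```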

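LoRA freezes a pretrained weight matrix W and trains only a low-rank update. A layer therefore computes y = Wx + (alpha/r)·B(Ax), where A is r x d_in and B is d_out x r. The pure-Python sketch below shows that forward pass and the parameter arithmetic that makes LoRA cheap. The model shapes (Llama 2 7B: hidden size 4096, 32 layers) and the adapter placement (rank 8 on the `q_proj` and `v_proj` attention projections) are illustrative assumptions, not settings taken from the guides above.

```python
def matvec(m, v):
    """Multiply a matrix (a list of rows) by a vector (a list)."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(w, a, b, x, alpha, r):
    """Compute y = W x + (alpha / r) * B (A x) for one input vector x.

    w: d_out x d_in frozen base weight
    a: r x d_in trainable down-projection
    b: d_out x r trainable up-projection; LoRA initialises it to zeros,
       so training starts from the unchanged base model
    """
    base = matvec(w, x)
    update = matvec(b, matvec(a, x))
    scale = alpha / r
    return [y0 + scale * u for y0, u in zip(base, update)]


def lora_trainable_params(d_in, d_out, r):
    """Parameters added by one adapter: A has r*d_in entries, B has d_out*r."""
    return r * (d_in + d_out)


if __name__ == "__main__":
    # Assumed Llama 2 7B shapes: hidden size 4096, 32 decoder layers.
    # Assumed adapter placement: q_proj and v_proj in every layer, rank 8.
    hidden, layers, r = 4096, 32, 8
    total = layers * 2 * lora_trainable_params(hidden, hidden, r)
    print(f"LoRA trainable params: {total:,}")                              # 4,194,304
    print(f"Params in one full 4096x4096 projection: {hidden * hidden:,}")  # 16,777,216
```

Under these assumptions, the adapters across all 32 layers hold fewer parameters than a single full attention projection. In the PEFT library, the corresponding knobs are `LoraConfig`'s `r`, `lora_alpha`, and `target_modules`.
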
A common question is how to get access to a Llama 2 API key in order to use a Llama 2 model in an application. For an example of how to integrate LlamaIndex with Llama 2, see here; a completed demo app also shows how to use LlamaIndex to … To generate an API key, click User on the right side of the application header, then click Generate API Key in the Generate API Key flyout.

Usage tips: the Llama 2 models were trained using bfloat16, but the original inference uses float16. The checkpoints uploaded on the Hub use `torch_dtype` …

Kaggle is a community for data scientists and ML engineers that offers datasets and trained ML models.
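
The bfloat16/float16 usage tip matters because the two 16-bit formats split their bits differently. bfloat16 keeps float32's 8-bit exponent, so its range is nearly as wide as float32's, but it has only 7 explicit mantissa bits. float16 has 10 mantissa bits but a 5-bit exponent, so its largest finite value is 65504. The stdlib-only sketch below rounds Python floats to each format so the difference is visible. It illustrates the number formats only. In practice the dtype is chosen when loading the model, for example through `torch_dtype` in Transformers' `from_pretrained`.

```python
import struct


def to_float16(x):
    """Round x to IEEE float16; return None if x is outside float16's range (max 65504)."""
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except OverflowError:
        return None


def to_bfloat16(x):
    """Round x to bfloat16 (round to nearest, ties to even) and return it as a Python float.

    A bfloat16 value is the top 16 bits of a float32, so we round the float32
    bit pattern and then zero its low 16 bits.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    bits += 0x7FFF + ((bits >> 16) & 1)  # round to nearest, ties to even
    bits &= 0xFFFF0000                   # keep sign, 8 exponent bits, 7 mantissa bits
    return struct.unpack("<f", struct.pack("<I", bits))[0]


if __name__ == "__main__":
    for v in (0.1, 1e-8, 70000.0):
        # float16 underflows 1e-8 to 0.0 and cannot represent 70000.0 at all;
        # bfloat16 keeps both, with coarser precision.
        print(v, to_float16(v), to_bfloat16(v))
```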

The Llama 2 authors describe their fine-tuned LLMs, called Llama 2-Chat, as optimized for dialogue use cases. They report that these models outperform open-source chat models on most …

"Fine-tune Llama 2 with DPO" (published August 8, 2023) is by Kashif Rasul, Younes Belkada, and Leandro von … Another tutorial provides a comprehensive guide to fine-tuning Llama 2 with techniques such as QLoRA, PEFT, and SFT to overcome memory and …

In this blog post, we look at how to fine-tune Llama 2 70B using PyTorch FSDP and related best practices.

Fine-tuning with PEFT: training LLMs can be technically and computationally challenging. In this section, we look at the tools available …
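
Some rough arithmetic shows why a 70B model calls for either sharding (FSDP splits parameters, gradients, and optimizer state across GPUs) or quantization (QLoRA keeps the frozen base weights in 4-bit). The sketch below counts weight memory only, and the 8-GPU count is an assumed example. Real training also needs memory for activations, gradients, optimizer state, and framework overhead, so treat these numbers as lower bounds.

```python
# Bytes needed to store one parameter in common formats.
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "bf16": 2.0,
    "fp16": 2.0,
    "int8": 1.0,
    "4bit": 0.5,  # e.g. QLoRA's NF4 weights, ignoring the small quantization constants
}


def weight_memory_gb(n_params, dtype, n_shards=1):
    """Weights-only memory in GB (1 GB = 1e9 bytes), split evenly across n_shards GPUs.

    Excludes activations, gradients, optimizer state and overhead.
    """
    return n_params * BYTES_PER_PARAM[dtype] / n_shards / 1e9


if __name__ == "__main__":
    n = 70e9  # Llama 2 70B
    print(weight_memory_gb(n, "bf16"))              # 140.0 -> too large for a single GPU
    print(weight_memory_gb(n, "bf16", n_shards=8))  # 17.5 per GPU if sharded over 8 GPUs (assumed)
    print(weight_memory_gb(n, "4bit"))              # 35.0 for 4-bit quantized base weights
```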

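Direct Preference Optimization (DPO), the topic of the DPO post mentioned above, trains the model directly on pairs of preferred ("chosen") and dispreferred ("rejected") responses against a frozen reference model. For one pair, the loss is -log σ(β[(log π(y_w|x) - log π_ref(y_w|x)) - (log π(y_l|x) - log π_ref(y_l|x))]). The plain-Python sketch below computes that loss from summed response log-probabilities. Training code would compute it over batches of tensors instead, and the default `beta=0.1` is an assumed value.

```python
import math


def softplus(z):
    """log(1 + exp(z)), computed without overflow for large |z|."""
    if z > 0:
        return z + math.log1p(math.exp(-z))
    return math.log1p(math.exp(z))


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for a single preference pair.

    Each *_logp is the summed log-probability of a whole response
    (chosen = preferred, rejected = dispreferred) under either the policy
    being trained or the frozen reference model.
    """
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_logratio - rejected_logratio)
    # -log(sigmoid(margin)) is equal to softplus(-margin)
    return softplus(-margin)


if __name__ == "__main__":
    # Policy identical to the reference: the margin is 0 and the loss is log 2 (about 0.693).
    print(dpo_loss(-12.0, -15.0, -12.0, -15.0))
    # Policy made the chosen response more likely than the reference did: the loss drops.
    print(dpo_loss(-10.0, -15.0, -12.0, -15.0))
```

Lowering the loss requires raising the chosen response's log-probability relative to the reference by more than the rejected one's. β controls how hard the policy is pushed away from the reference.
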
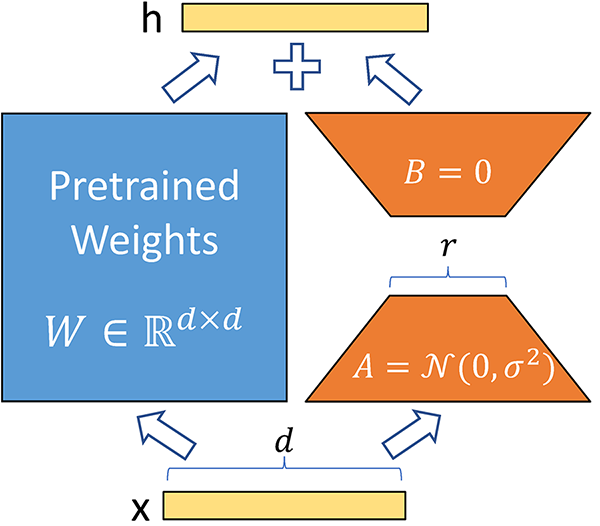

In this guide, we'll show you how to fine-tune a simple Llama 2 classifier that predicts whether a text's … It contains working end-to-end dataflows that start with some tuning data and tune the base Llama 2 model with that data. This article's objective is to deliver examples that allow for an immediate start with Llama 2 fine-tuning. Learn how to fine-tune Llama 2 with LoRA (Low-Rank Adaptation) for question answering. This Jupyter notebook steps you through how to fine-tune a Llama 2 model on the text … For details on formatting data for fine-tuning Llama Guard, we provide a script and sample usage here.
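
Several of these guides begin by formatting the tuning data. The sketch below turns one question-answering record into a prompt/completion pair using the Llama 2 chat layout (`[INST]`, `<<SYS>>` … `<</SYS>>`, `[/INST]`). The function name, the default system prompt, and the "Context:/Question:" layout are hypothetical choices for illustration. The sketch does not cover Llama Guard, which uses its own format.

```python
# Hypothetical default instruction; adjust it to the task.
DEFAULT_SYSTEM_PROMPT = "Answer the question using only the given context."


def format_qa_example(context, question, answer, system_prompt=DEFAULT_SYSTEM_PROMPT):
    """Turn one QA record into a prompt/completion pair in the Llama 2 chat layout.

    BOS/EOS are left out on purpose: they are normally added as token IDs by
    the tokenizer or training code rather than written into the text.
    """
    user_turn = f"Context: {context.strip()}\nQuestion: {question.strip()}"
    prompt = f"[INST] <<SYS>>\n{system_prompt}\n<</SYS>>\n\n{user_turn} [/INST]"
    completion = f" {answer.strip()}"
    return {"prompt": prompt, "completion": completion}


if __name__ == "__main__":
    record = format_qa_example(
        context="Paris is the capital of France.",
        question="What is the capital of France?",
        answer="Paris",
    )
    print(record["prompt"] + record["completion"])
```

Keeping the prompt and the completion separate is a common choice. It lets the training loop mask the prompt tokens so the loss is computed only on the answer.
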
